In 2012, I was alarmed to read about Galvanic Skin Response or GSR bracelets that measured students’ emotions and engagement in classrooms in the United States. How would the sensors record a child who looked out to watch a bird? Would they think she was excited, distracted, or bored?

More disturbingly, the sensors had been meant to evaluate teachers’ performance. The Gates Foundation had given $1.4 million to a university under its ‘Measures of Effective Teaching’ programme, but later backtracked, saying the aim was to improve students’ learning. The foundation explained that the bracelet’s biometric electrodes send a small current across the skin and measure changes in electrical charge as the sympathetic nervous system responds to stimuli. It hoped this would serve as a ‘simple classroom tool’, enabling teachers to see ‘which kids are tuned in and which are zoned out.’

There were strong protests from teachers and students.

One teacher said Bill Gates continued to demean and insult the teaching profession, which he knew nothing about. Others offered suggestions for getting higher readings on the sensors: one teacher said she could randomly scream at a student to keep everyone in a state of high anxiety, while students said soft porn might work better.

Diane Ravitch, a professor at New York University and former Assistant Secretary of Education, wrote about this on her blog: ‘There is reason to be concerned about the degree of wisdom – or lack thereof – that informs the decisions of the world’s richest and most powerful foundations. And yes, we must worry about what part of our humanity is inviolable… what part of our humanity is off-limits to those who wish to quantify our experience and use it for their own purposes, be it marketing or teacher evaluation.’

She warned that this aligned with the US Department of Education’s huge investment in ‘data warehouses’, which would amass children’s vital statistics from birth and everything about them thereafter.

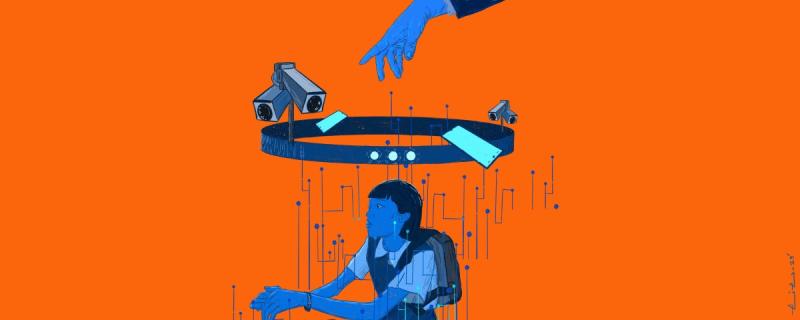

Indeed, Shoshana Zuboff wonders whether toddlers will now need early lessons in paranoia and anxiety to protect them against being devastatingly ‘datafied’. Children are subjected to relentless monitoring, with their images and data voluntarily made public by parents, caretakers or health providers. Wearables or apps linked to their beds, even toys and clothing, along with the massive datasets of the education system, have transformed an ecosystem of external cameras and CCTV surveillance into one of invasive dataveillance.

Surveillance capitalism, as Zuboff shows in The Age of Surveillance Capitalism, is a new economic order in which all human experience is treated as free raw material, to be secretly extracted for commercial use and for predicting, nudging and modifying human behaviour for others’ profit. Without regulations on the mining and selling of personal data, children are the most vulnerable, with their intimate toys and ‘smart home’ devices constantly spying on them.

When the toy company Mattel, known for Barbie dolls, hired its CEO from Google in 2017, analysts saw it as a shift from ‘making great products for you to collecting great data about you.’ The same year, the CEO of iRobot, maker of the Roomba, a ‘smart’ vacuum cleaner that maps homes, announced plans to generate revenue by selling floor plans. When some customers raised data privacy concerns, they were allowed to opt out, but only with reduced service and functionality.

A market for emotions and captive classrooms

Emotion analytics is a highly profitable form of dataveillance that trains its software on faces, voices, bodies and brains to amass and measure intimate behaviours too subtle even for humans to notice. According to Zuboff, predictive behavioural data about our emotions and intentions is knowledge about us that we ourselves do not have, and it lends others power over us; the aim is no longer only to ‘automate information flows about us’ but ‘to automate us.’ She warns that just as industrial civilisation, by mining nature, now threatens to cost us the earth, an information civilisation shaped by surveillance capitalism, by mining human nature, threatens to cost us our humanity.

Microsoft had filed a patent application in 2008 to monitor employees’ metabolism through GSR, brain signals, facial expressions and so on, but children in classrooms perhaps offered a more captive audience. The rush to measure emotions followed Rosalind Picard’s work on affective computing at the MIT Media Lab in Cambridge, Massachusetts. While the conscious mind is aware of some emotions that can be expressed in words (for instance, ‘I am scared’), others remain hidden. These may be expressed unconsciously, as a momentary widening of the eyes, a fleeting contraction of the facial muscles, or beads of sweat. Picard rendered conscious and unconscious emotion as observable behaviour, for coding and calculating.

As with much technological research initially pitched for an ostensibly innocent medical purpose, Picard sought to build ‘wearable computers’ for autistic children. She envisaged that learners would control their own data, but also warned of the dystopian possibility of governments manipulating the emotions of large populations.

However, within three years of founding the company Affectiva with a research protégé, she was pushed out by commercial pressures from advertising and digital surveillance. The company’s nuanced analysis of the micro-expressions of customers watching advertisements had set the market on fire, because face-to-face surveys had never elicited such ‘articulate’ responses from people. Her protégé, buoyed by the prospects of an ‘emotion economy’, even called for an ‘emotion chip’ implanted in bodies, to modify our emotions and offer ‘happiness as a service’, since happy customers buy more.

Appropriately titled ‘A Market for Emotions’, a 2014 MIT News report today reads more like a dystopian advertisement.

The Affectiva co-founder touts a grandiose vision of a ‘mood-aware’ internet, in which connected websites and apps would work like a large retail store whose sales attendants read your physical cues to decide what to approach you with. The report brandishes the advanced emotion-tracking software, based on years of research at the MIT Media Lab and backed by $20 million in funding, with big-name clients such as Kellogg and Unilever. It says that Affectiva had amassed the world’s largest facial coding dataset, of 1.7 million facial expressions from people of different races across 70 countries, to train its algorithms to discern expressions on all face types and skin colours, even on moving faces, in all types of lighting. Of course, the research draws its justification from pilot work in online learning, which captures facial engagement data (whether a student is bored, frustrated or focused) to predict ‘learning outcomes’.

Similar research conducted by the Laboratory of Cognitive Neuroscience and Learning at Beijing Normal University claims to improve pedagogy.

A video of this study shows children wearing headbands with colour-coded lights that alert the teacher when they are not paying attention, while parents are told how long their children were attentive or distracted. There are serious concerns about the ethics of such applications, the ‘evidence’ they are assumed to provide about learning, and the detrimental impact of technology on young minds.

The pedagogy of neuroscience analytics is driven by commercial agendas and neoliberal perspectives. It follows an input-output model, even measuring teachers’ efficiency with devices on children’s bodies. But teaching is not merely transmitting knowledge, capturing students’ attention or stimulating the brain. Social constructivist perspectives on learning show that knowledge is constructed by learners, not as solitary individuals but through a social process. For learning and understanding to happen, students must be engaged in dialogue, thinking, disagreeing, reflecting, and connecting new ideas with their diverse lived experiences.

The pandemic-driven push for digital education, as part of disaster capitalism, has completely ignored any educational appraisal of technologies that harm children. The education technology (ed-tech) market has been known to amplify the supremacist assumptions and biases of a largely white, male industry about learning and children. So do the algorithms responsible for the ‘adaptive’ nature of online education. These compile massive datasets on every child’s interactions (each pause, each doubtful or unconscious press of a button, every considered action) in order to customise or ‘personalise’ the digital platform and serve the next piece of instruction accordingly.

Some children manage to crack and ‘outsmart’ the algorithms. One girl perceptively noted that when she deliberately leaves her worksheets blank, the ones she gets next are much easier! However, my discussions with some computer engineers planning start-ups for digital education using artificial intelligence have been disconcerting. With no understanding of how children learn, or of the implications of ‘datafying’ them, they showed an unquestioning faith in their technologies, coupled with naive and pompous claims of ‘democratising’ education for the poorest.

The National Education Policy (NEP) 2020 and Niti Aayog have given unqualified support to the increased use of artificial intelligence, digital platforms, machine learning and adaptive assessment of students. Moreover, in 2022, state governments such as Andhra Pradesh and Maharashtra signed MoUs with the online provider Byju’s to ‘modernise’ their school curricula.

Delhi government dismisses concerns on data privacy

Data privacy scholars call for sustained work in schools on critical digital literacy for students and parents. However, most school systems in India deny that data privacy is an issue, while themselves mining, using or handing over children’s data to undisclosed third parties.

When questioned about children’s privacy under its invasive regime of cameras and closed-circuit televisions (CCTVs) in schools, the Delhi government falls back on the lame rhetoric that ‘children do nothing private here’. It has tried to entice poor parents by offering live streaming of classrooms to their mobile phones, and has asked them to sign consent forms.

This shifts some of the responsibility for their children’s safety on to parents, while also inviting them to collude as snooping allies (Big Brother, Father and Mother) in invading the trusting pedagogical space of classrooms. Teachers, too, are increasingly under watch, through mandatory location-tracking apps, biometric attendance through tagged selfies, or ‘live snooping’ by parents.

Significantly, in 2019, before the launch of a facial recognition app on Teachers’ Day, the Gujarat State Teachers’ Association passed a resolution barring its teachers from downloading the Microsoft Kaizala app, through which they had been directed to take a selfie to mark their attendance and location.

The Delhi government has resorted to heightened control through surveillance, and to segregating children into ‘ability’ groups with differentiated examinations. Its education management advisers, namely Pratham, J-PAL and Central Square Foundation, call this differentiation and discrimination of children ‘Teaching at the Right Level’, an approach that concerned parents have contested in court. Principles of humanist education and the Right to Education Act do not allow such labelling and assigning of levels with differentiated examinations.

More problematically, well before the board examinations, the Delhi government ruthlessly pushes large numbers of ‘disposable’ children of ‘low ability’ out into the Open School, in an apparent effort to flaunt its board results in lavish advertisements. Even children who get a ‘compartment’, which allows them to reappear immediately in the supplementary examination, are forced to join the Open School.

I once accompanied some students who wanted to return to their school in Class 11, after being sent to the Open School and passing Class 10 on their own. The principal dismissed them and asked me to call a vocational training centre, saying such students are not expected to waste their time studying further. Teachers who protest against such unjust, discriminatory practices of exclusion are severely punished.

Nevertheless, senior teachers have continued to write about these concerns on an anonymous blog and in an open letter to a minister. According to them, despite a court order, schools have collected a host of personal data on parents and children, including voter cards, Aadhaar cards, mobile numbers, educational qualifications, and home ownership or tenancy details, with no norms on third-party sharing.

Children’s data is used for political propaganda, such as personalised messages from the chief minister or education minister, sent in the guise of birthday or examination wishes, that promote the party and solicit electoral support.

A court plea for the protection of the privacy of children and teachers was summarily dismissed. Teachers contend that while the invidious tracking of children is done in the name of accountability and transparency, it has ironically become next to impossible for research scholars, journalists or concerned individuals to visit schools and report on them rigorously and truthfully. Teachers also observe that, to counterbalance the surveillance, precious time and funds are spent showcasing activities such as the Happiness Curriculum or the Entrepreneurship Mindset Curriculum. Indeed, happiness should be integral to the trusting, inclusive environment of every classroom, where students learn about and from each other in mixed-ability groups.

The increasing use of digital data at the system level (large-scale assessments, student databases) and at the school level (attendance, assessments, online adaptive learning) raises several ethical issues. Education systems view learners as disposable consumers responsible for their own learning trajectories and for the risks involved. Studies on data privacy in the UK have reported concerns about digital dossiers of children that can remain permanently in the public domain and affect their future opportunities in education, health, employment or access to financial credit.

Questioning the system’s assumption that parents can take care of their children’s data privacy, researchers note that parents usually trust schools; they might object only to interpersonal information about their children’s behaviour being made public. Children, though aware, look at privacy only in interpersonal terms: cyberbullying, sexting, or not giving away too much personal information to strangers. Even informed parents think of institutional data gathering as anonymous ‘big data’ somewhere in a cloud, posing no threat to immediate relational privacy. They also assume that commercial data they provide, say for an online purchase, will be used only to deliver the service; most are unaware of the opaque data traces, or ‘inferred’ data, being taken from them.

William Davies, in his book The Happiness Industry, argues that the word ‘data’, from the Latin ‘datum’, meaning ‘that which is given’, has become ‘an outrageous lie.’ Most data are taken secretly: through experiments, through the power inequalities of surveillance, or through misleading lotteries and rewards. Whereas the social sciences seek data under specified terms, subject to constant negotiation and reflection, in the behavioural sciences data is extracted by a powerful, opaque system of watching, measuring and controlling people. He argues that the behavioural and emotional sciences can be dangerous for democracy, which depends on people’s conscious voice; these sciences treat our unconscious data as ‘inner truth’ while disparaging our conscious opinions and criticisms as unreliable.

Datafication and the larger ‘neuroliberal’ agenda

The documentary The Social Dilemma poignantly depicts how the datafication of young social media users in search of friends affects their brains and sucks them into a vicious cycle of egocentric exhibitionism and grandiose behaviour. Their brains ‘rewired’ in an algorithmic, ‘unsocial’ regime, and made to depend on armies of virtual ‘friends’ for an external sense of selfhood, young people come to hold an instrumental idea of relationships. Nudged to keep changing their ‘roster’ of relationships, unfriending or unfollowing those who do not provide enough ‘likes’ for the ‘high’ they are addicted to, they find themselves desolate and insecure.

Susan Greenfield, the eminent neuroscientist, argues that Mind Change is as unprecedented and catastrophic as climate change.

The brains of young ‘digital natives’, across different cultural contexts, are adapting to the cyber world in ways that exaggerate our basic human frailties, without the evolutionary constraints and checks provided by close interpersonal relationships. The absence of body language and emotion (of being seen fumbling, or felt to have damp hands) removes checks on self-disclosure and, without a sense of privacy developed with close friends, can lead to reckless attention-seeking and obsessive narcissism.

This results in a lack of empathy and of understanding of others’ emotions (normally developed through a complex process that can take the first 20 years of a person’s life), and can create nasty, crafty online selves. Indeed, the relentless and unrealistic barrage of personal information that others exhibit on digital networks creates a culture where snooping is a heady part of relating to others, and can demolish the self-esteem and nascent identities of teenagers.

Social-emotional learning (SEL) is part of the gamut of new techno-solutions touted in education and neuroscience, positing happiness and mindfulness as essential strategies for individual learners while deliberately deflecting attention from systemic issues. Research in this area is heavily funded by corporate foundations and for-profit providers, and supported by organisations such as UNESCO.

The Mahatma Gandhi Institute of Education for Peace and Sustainable Development (MGIEP) was established in Delhi as a Category 1 Institute of UNESCO, largely funded by the government of India. It was envisioned to follow Gandhian values of humanism, peace and sustainable development.

However, Edward A. Vickers and other scholars associated with the institute have noted that, contrary to its founding legacy and under its director Anantha Kumar Duraiappah, MGIEP has even rebranded Gandhi, politically hollowed out, as a holographic agent selling its ‘neuroliberal’ digital agenda. The cover of one of its publications, ‘Rewiring the brain to be future-ready’, shows a memory chip inscribed with words like critical inquiry, mindfulness and empathy being inserted into a child’s head. Recently, in collaboration with the government of Karnataka, MGIEP announced an International Hub for Education in the Mixed Reality (IHEM). Questions need to be raised about substantial national public funds for children’s education being diverted to these questionable and potentially damaging areas of intervention.

In the Indian context, it is crucial to recognise that the ‘digital bodies’ (images, information and biometric data) of poor children are at greater risk of being used as bio-capital for large datasets sold globally for profit. There are serious concerns about dataveillance becoming a central motive of international aid for health and education to the Global South, where vulnerable children can become objects of sympathy for humanitarian intervention. Funding agencies pitch these as technical innovations that accelerate the digitisation of beneficiaries’ bodies and improve the accuracy of digital records in rural locations, with no attention to issues of social justice or collective action.

Kristin Sandvik, a scholar of law and ethics in aid, has analysed ‘Khushi Baby’, a digital necklace for infants promoted with prestigious innovation awards by UNICEF and other international organisations, influential universities, international media and corporate foundations. Developed at Yale University, it was trialled in rural Udaipur and modified for expanded technical dataveillance of infants, mothers, caregivers and health workers, including in other countries. A host of advanced add-on technologies has turned it into a huge surveillance system with facial biometrics, used for tracking child and maternal health, the attendance of health workers, data about chronic diseases, and even ‘conditional cash transfers, ration cards, emergency medical response and hospital re-admissions’.

Concerns have recently been raised about the weaponisation of digital health records by the police making sweeping arrests for child marriage in Assam. The unequal power dynamics of these technologies and agencies intruding on children’s bodies exacerbate the dangers of data colonialism and the lack of data privacy. There is an urgent need to stop this mining, disposing and morphing of little selves in the name of education, health or digitisation. Sandvik questions the ethics of the unpaid labour of the tiny wearer who carries the device for free, while the archives capitalise on its enormous commercial value. Curiously, the innovation revels in capitalising on people’s lack of knowledge, savvily describing itself as ‘a culturally tailored piece of jewellery’ whose black string, many tribal communities of India believe, serves ‘to ward off evil spirits’.

(Anita Rampal taught at the Faculty of Education, Delhi University. Courtesy: The Wire and Seminar 765.)